Building More Engaging Games with ML-Agents | Unity

I tried applying ML-Agents 2.0 to a simple Flappy Bird game.

```csharp
using System;
using UnityEngine;
using UnityEngine.Events;
using Unity.MLAgents;
using Unity.MLAgents.Actuators;
using Unity.MLAgents.Sensors;

public class BirdAgentTest : Agent
{
    public float jumpForce;
    public UnityAction onDie;
    public UnityAction<int> onSetLevel;

    private Rigidbody2D rBody;
    private EnvironmentParameters m_ResetParams;

    // Called once when the agent is first set up, before the first episode.
    public override void Initialize()
    {
        this.rBody = this.GetComponent<Rigidbody2D>();
        this.m_ResetParams = Academy.Instance.EnvironmentParameters;
    }

    public override void OnEpisodeBegin()
    {
        this.Init();
    }

    public void Init()
    {
        // Read the current curriculum level ("wall_height" in the trainer config).
        int level = Convert.ToInt32(this.m_ResetParams.GetWithDefault("wall_height", 0f));
        this.onSetLevel?.Invoke(level);
    }

    public override void CollectObservations(VectorSensor sensor)
    {
        // Observation: the agent's own y position (1 value).
        sensor.AddObservation(this.transform.localPosition.y);
    }

    public override void OnActionReceived(ActionBuffers actions)
    {
        // Actions and rewards.
        int isJump = actions.DiscreteActions[0];

        // Leaving the playable area vertically ends the episode.
        float y = this.transform.localPosition.y;
        if (y <= -5.0f || y >= 5.6f)
        {
            this.Die();
            return; // no jump and no survival reward after dying
        }

        if (isJump == 1)
        {
            this.Jump();
        }

        // Small reward for every step survived.
        AddReward(0.1f);
    }

    public override void Heuristic(in ActionBuffers actionsOut)
    {
        // Manual control: hold Space to jump.
        var discreteActions = actionsOut.DiscreteActions;
        discreteActions[0] = Input.GetKey(KeyCode.Space) ? 1 : 0;

        // Manual play always uses level 0.
        this.onSetLevel?.Invoke(0);
    }

    private void Jump()
    {
        this.rBody.velocity = Vector2.up * this.jumpForce;
    }

    private void Die()
    {
        AddReward(-1f);
        this.onDie?.Invoke();
        EndEpisode();
    }

    // Touching any trigger collider counts as a crash.
    private void OnTriggerEnter2D(Collider2D collision)
    {
        this.Die();
    }
}
```

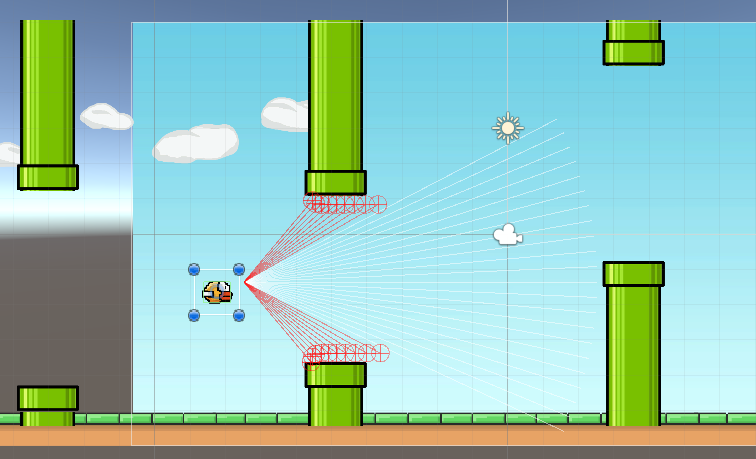

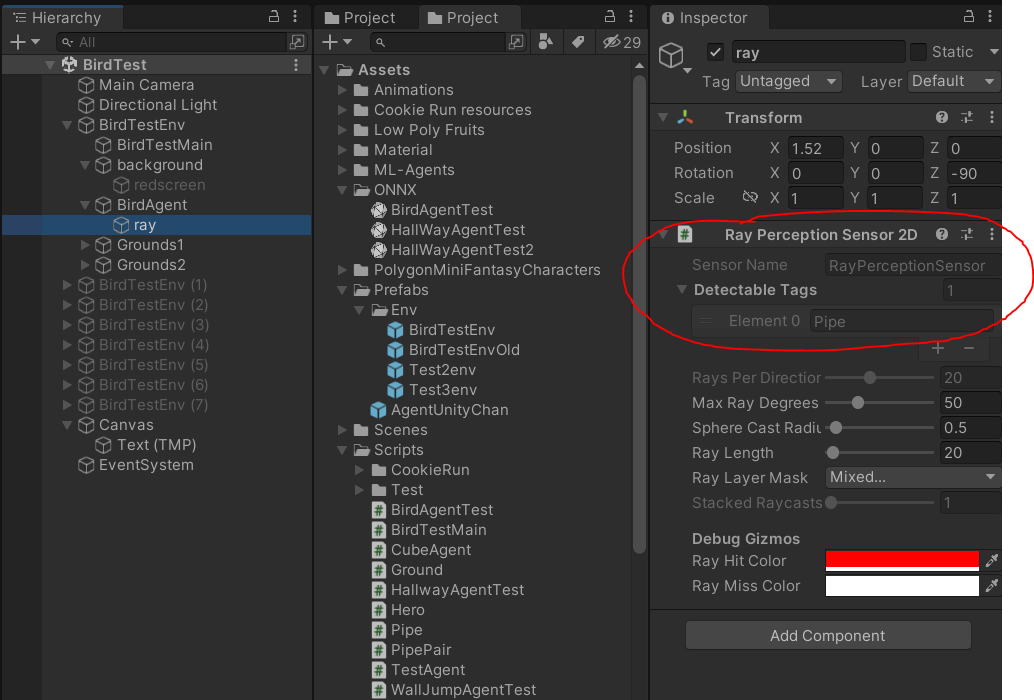

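The agent observes this.transform.localPosition.y, that is, its own y position. I also attached box colliders to the moving pipes so that the agent's rays can detect them, and tagged them Pipe, then trained the agent to survive as long as possible without hitting a pipe.

The agent can only use the Jump method, so all it has to do to survive is control its jump timing. I think that is why it learned relatively easily.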

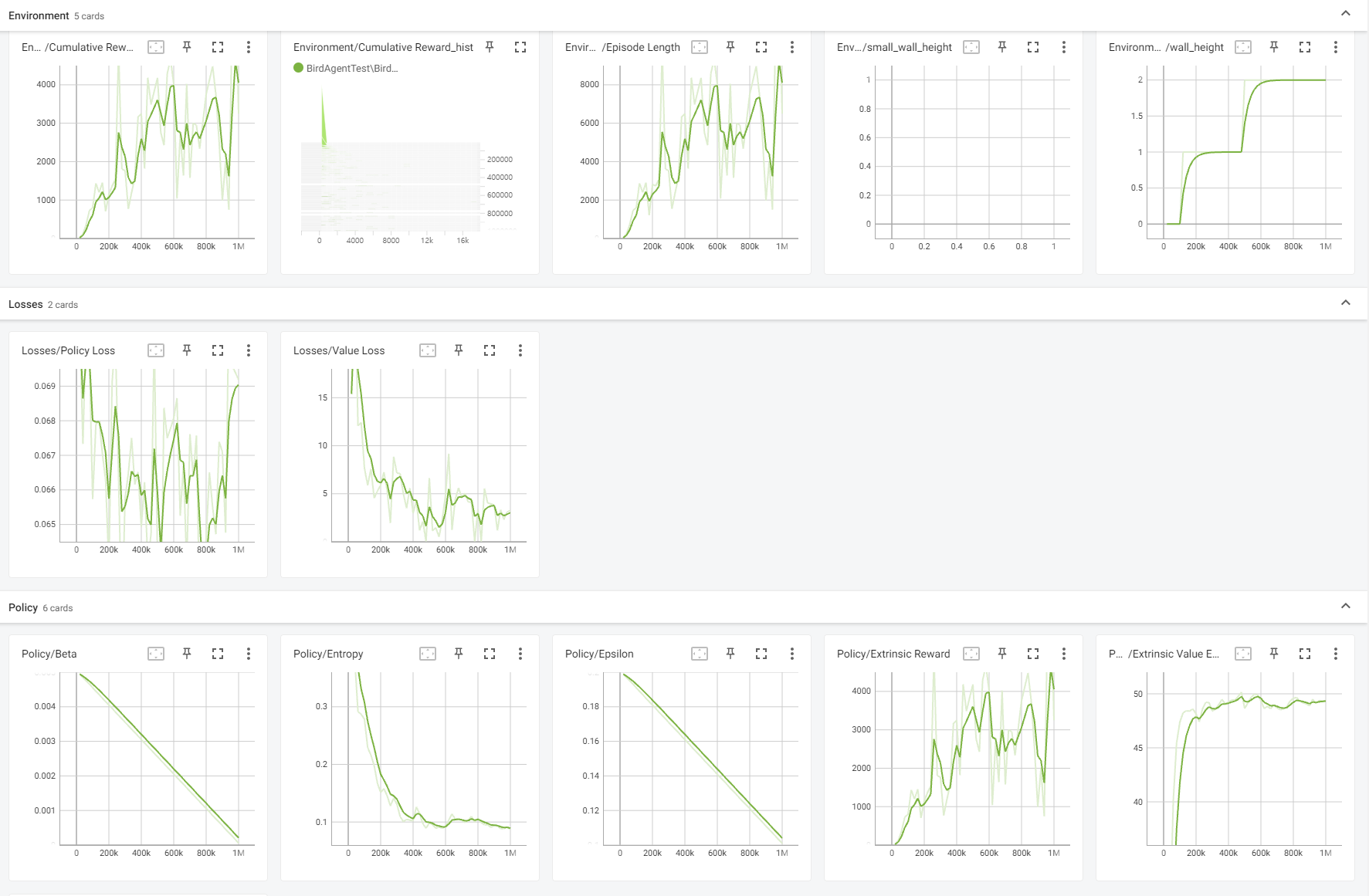

Below is the result of training for a total of 1 million steps, raising the difficulty stage by stage.

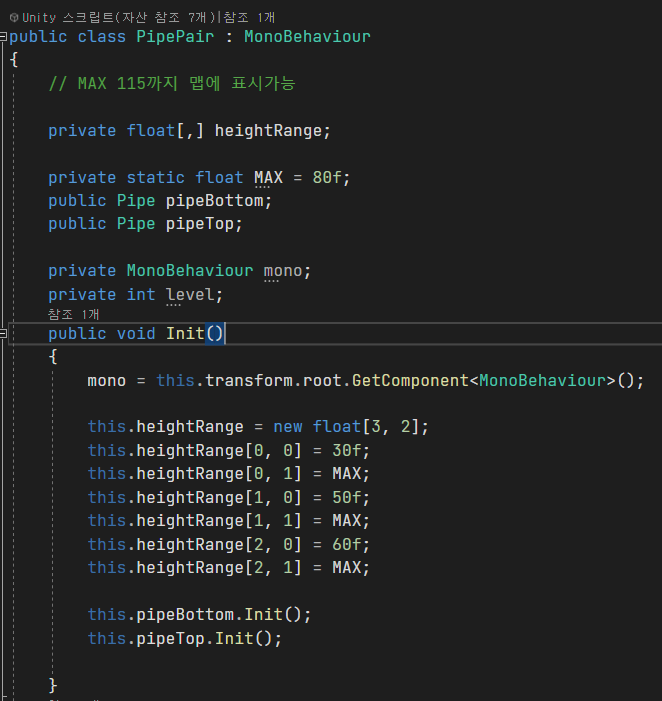

This is the part that adjusts the difficulty: the gap between the upper and lower pipes starts out wide and then gradually narrows as training progresses.
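The subscriber of onSetLevel is not listed as text in this post, so here is only a minimal sketch of how a level could be turned into a pipe gap. The class name PipeGapTable, the method GapForLevel, and the gap sizes are all hypothetical and are not the values I actually used.

```csharp
using System;

// Hypothetical helper: maps a curriculum level to the vertical gap between pipes.
public static class PipeGapTable
{
    // Illustrative gap sizes in world units, widest first (assumed values).
    private static readonly float[] GapByLevel = { 4.0f, 3.2f, 2.5f };

    public static float GapForLevel(int level)
    {
        // Clamp so an unexpected level never indexes outside the table.
        int index = Math.Max(0, Math.Min(level, GapByLevel.Length - 1));
        return GapByLevel[index];
    }
}
```

A pipe spawner could subscribe to onSetLevel and call GapForLevel(level) whenever a new episode starts, so the same agent script works at every difficulty.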

BirdAgentTest.yaml

```yaml
behaviors:
  BirdAgentTest:
    trainer_type: ppo
    hyperparameters:
      batch_size: 128
      buffer_size: 2048
      learning_rate: 0.0003
      beta: 0.005
      epsilon: 0.2
      lambd: 0.95
      num_epoch: 3
      learning_rate_schedule: linear
    network_settings:
      normalize: false
      hidden_units: 256
      num_layers: 2
      vis_encode_type: simple
    reward_signals:
      extrinsic:
        gamma: 0.99
        strength: 1.0
    keep_checkpoints: 5
    max_steps: 1000000
    time_horizon: 128
    summary_freq: 20000
environment_parameters:
  wall_height:
    curriculum:
      - name: Lesson0 # The '-' is important as this is a list
        completion_criteria:
          measure: progress
          behavior: BirdAgentTest
          signal_smoothing: true
          min_lesson_length: 100
          threshold: 0.1
        value: 0.0
      - name: Lesson1 # This is the start of the second lesson
        completion_criteria:
          measure: progress
          behavior: BirdAgentTest
          signal_smoothing: true
          min_lesson_length: 100
          threshold: 0.3
        value: 1.0
      - name: Lesson2
        completion_criteria:
          measure: progress
          behavior: BirdAgentTest
          signal_smoothing: true
          min_lesson_length: 100
          threshold: 0.5
        value: 2.0
```
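With measure: progress, a lesson is judged by how far training has advanced, i.e. the current step divided by max_steps. The following is a rough sketch of that arithmetic, not the trainer's actual code. It assumes a lesson ends once progress exceeds its threshold, ignores min_lesson_length, and treats the last lesson's threshold as having no effect because there is no later lesson to move to. CurriculumProgress and LessonAt are hypothetical names.

```csharp
// Hypothetical helper that mirrors the progress-based lesson switch.
public static class CurriculumProgress
{
    // Returns the index of the lesson active at `step`.
    // `thresholds` holds the progress threshold of every lesson except the last.
    public static int LessonAt(long step, long maxSteps, double[] thresholds)
    {
        double progress = (double)step / maxSteps;
        int lesson = 0;

        // Move to the next lesson each time progress passes a threshold (assumed rule).
        while (lesson < thresholds.Length && progress > thresholds[lesson])
        {
            lesson++;
        }
        return lesson;
    }
}
```

Under these assumptions, with max_steps 1000000 and thresholds 0.1 and 0.3, step 50,000 is still in Lesson0, step 200,000 is in Lesson1, and step 500,000 is in Lesson2. So the narrowest gap would be in use for roughly the last 70% of training.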
